Event Tech Knowledgebase

Our guide for event organisers and production managers to simplify terminology, detail the pros and cons of different services and provide some example setups for different types of events.

CCTV

The PTZ (Pan-Tilt-Zoom) camera is a key component in many CCTV installations, as its ability to move around and zoom in provides a flexible way to cover large areas. There is a wide range of PTZ cameras offering different levels of specification and quality. Key factors include the level of optical zoom (18x – 24x tends to be the most useful), motion detection & tracking, resolution, frame rate and what is known as ‘guard tour’ – the ability for the camera to automatically pan between a number of locations.

PTZ cameras also include features such as a heater to ‘defog’ the dome if condensation builds up, and low-light/infra-red support.

CCTV has evolved rapidly over the last few years, leaving quite a complex landscape. Most analogue systems were classed as SD (Standard Definition), by comparison with the old broadcast television standard. Early digital systems used the same basic premise and operated at a resolution of 720 x 576 pixels. Next came HD (High Definition) at 1280 x 720 pixels (known as 720p), followed by Full-HD at 1920 x 1080 pixels (1080p). Some of the latest generation of cameras are now moving to QHD (Quad HD) with a resolution of 2560 x 1440 pixels (1440p).

A good CCTV system will typically operate at HD at least, and ideally Full-HD, to provide the level of detail expected. There are many other factors, though, such as frame rate, compression and bit rate, which all affect the overall image quality.
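Bit rate also drives recording storage, which is worth estimating when specifying a recorder. The sketch below assumes continuous recording at a constant bit rate per camera; cameras using variable bit rate or motion-triggered recording will use less, and the 4Mbps, 12-camera, 14-day figures are illustrative assumptions only.

```python
def recording_storage_gb(bitrate_mbps: float, cameras: int, days: float) -> float:
    """Estimate storage in decimal gigabytes (1GB = 1000MB) for continuous recording.

    Assumes every camera records 24 hours a day at a constant bit rate.
    """
    seconds = days * 24 * 60 * 60
    megabits = bitrate_mbps * cameras * seconds
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


# Hypothetical example: 12 cameras at an assumed 4Mbps each, retained for 14 days
print(f"{recording_storage_gb(4, 12, 14):,.0f} GB")  # prints 7,258 GB
```

Doubling the bit rate or the retention period doubles the storage, so these two settings are usually the first to agree with whoever manages the recorder.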

Static CCTV cameras are fixed position cameras with a single field of view. Being mechanically less complex than their PTZ counterparts they often provide higher resolution images, built-in infra-red support (for night vision) and increasingly features such as digital PTZ. More advanced static CCTV cameras offer auto-focus and an adjustable Field of View (FoV).

Most often they are used to monitor specific areas such as entrances, bars, roads, box offices, etc. where having a continuous view is important. With additional software, static CCTV cameras are also used for ANPR (Automatic Number Plate Recognition) and for some aspects of crowd density analysis.

Common Scenarios

Video streaming from an event site has some very specific requirements and must be considered well in advance of the event to ensure success. The first question is the level of quality required for the stream which may be SD (Standard Definition), HD (High Definition – 720p), Full-HD (High Definition – 1080p), 2K (1440p) or 4K (2160p). In simple terms the higher the quality the higher the bit-rate required, typically ranging from around 2Mbps at the low end up to over 45Mbps at the top end, possibly even higher for frame rates above 30fps!

The key point is that the bandwidth is needed on the upload side rather than the download side, so it is essential to check the upload speed: many services quote only the headline download speed and leave out the all-important upload speed, which may be considerably slower. If a two-way video stream is proposed then symmetric bandwidth is essential. Alongside the upload speed, the quality of the connection is also very important: streaming requires a very stable connection with low jitter and no packet loss.
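A quick sanity check of a line against a planned stream can be sketched as follows. It compares the measured upload speed, not the headline download figure, with the stream bit rate plus some headroom. The 1.5x headroom factor and the 6Mbps stream are assumptions for illustration, and the check says nothing about jitter or packet loss, which need testing separately.

```python
def required_upload_mbps(stream_mbps: float, headroom: float = 1.5) -> float:
    """Upload capacity to reserve for one outgoing stream.

    The 1.5x headroom is an assumed safety margin for bit-rate peaks,
    protocol overhead and other traffic sharing the link, not a standard.
    """
    return stream_mbps * headroom


def upload_is_sufficient(measured_upload_mbps: float, stream_mbps: float,
                         headroom: float = 1.5) -> bool:
    # Compare against the measured *upload* speed, never the headline download speed.
    return measured_upload_mbps >= required_upload_mbps(stream_mbps, headroom)


# Hypothetical check: an assumed 6Mbps stream on a line measured at 8Mbps upload
print(upload_is_sufficient(8, 6))  # False: 9Mbps would be needed
```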

Latency is generally only an issue for a two-way stream, so satellite is an option for one-way streaming. Low-grade ADSL connections do not have the upload capacity for streaming, although a good quality FTTC service may be OK. Mobile 3G/4G connections, particularly when aggregated, can be OK in low-density environments, but there are significant risks in an event environment, where the arrival of the audience may well overload the available capacity and cause the stream to fail. For the best quality and reliability, a dedicated point-to-point link or optic fibre connection is the best solution.

In addition to the connectivity, it is also important to understand who is doing the encoding of the stream and configuring the distribution service such as YouTube or Ustream.

Public Wi-Fi internet access is often requested for event sites where mobile coverage is poor or has limited capacity, especially when attendee numbers are likely to be in the thousands. With current technology a good quality Wi-Fi experience is achievable, but there are some factors to consider:

- Backhaul connectivity – The actual connection to the internet is probably the most important success factor. With a large number of concurrent users there must be sufficient connectivity to ensure a good user experience and typically the cost of delivering this connectivity makes up a large proportion of the overall cost. Skimping on connectivity always leads to a poor experience and complaints that “it didn’t work”. From years of experience, we have built up a collection of models to calculate the likely level of connectivity required.

- Wi-Fi Density & Coverage – For a good user experience for thousands of users a significant number of wireless access points are needed to ensure appropriate coverage and density. This requires good planning and design to ensure that they do not interfere with each other. High-end professional access points can support up to several hundred users per unit depending on the type of usage compared to less than 50 on a cheap low-end product.

- Installation – Deploying a public network requires coordination across a number of areas; this is particularly important if the deployment is in a truly public space such as a city centre. Physical aspects of mounting, cabling (or Point-to-Point links), power, security and health & safety all need to be considered.

Alongside these aspects consideration also needs to be given to the method of access which may be totally open, perhaps with a terms and conditions “hi-jack/splash page” or it may be secured with some form of registration or code. The landing page may also be sponsored or branded to promote a product or event. Although ‘pay for access’ public networks can be deployed we do not typically recommend this for temporary events as users do not like this approach and take-up is generally very low.
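The backhaul and density points above lend themselves to a back-of-an-envelope estimate. The sketch below is a deliberately simple, hypothetical model and not the planning models mentioned above: every input is an assumption to be replaced with figures for the actual event, and it sizes access points by client count only, ignoring coverage and interference.

```python
import math


def plan_wifi(attendees: int, take_up: float, concurrency: float,
              users_per_ap: int, per_user_mbps: float) -> tuple[int, float]:
    """Return (access points needed, backhaul in Mbps) for a simple model.

    take_up       -- fraction of attendees expected to join the Wi-Fi
    concurrency   -- fraction of connected users actively using it at the same moment
    users_per_ap  -- realistic clients per access point for the hardware in use
    per_user_mbps -- average demand of one active user
    """
    connected = attendees * take_up
    access_points = math.ceil(connected / users_per_ap)
    backhaul_mbps = connected * concurrency * per_user_mbps
    return access_points, backhaul_mbps


# Every input below is an illustrative assumption, not a measured figure:
# 5,000 attendees, 40% take-up, 20% active at once, 150 clients per AP, 0.5Mbps each.
aps, backhaul = plan_wifi(5000, 0.40, 0.20, 150, 0.5)
print(f"{aps} access points, about {backhaul:.0f}Mbps of backhaul")
```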

Keeping a production team connected is a primary task and usually consists of providing a good internet connection, Wi-Fi, VoIP (phones), wired connections and associated IT support. Internet connectivity may be delivered via one or more services such as satellite, 3G/4G, ADSL, FTTC, wireless or fibre, depending on requirements and budget. User access control is normally in place, often coupled with rate shaping and prioritisation to ensure the production users always have good access.

Services are normally deployed when the production team arrive (“First Day Service”) and last until the team leave site (“Last Day Service”). Depending on the size of the event additional services may be deployed during the build as the team and site grows. For big sites multiple locations may be connected using different technologies including cabling, wireless links and optical fibre.

Mobile application launch events can place high demands on a temporary network depending on the type of application. The install size of a native smartphone application is often over 100MB, and with a large audience all trying to download it simultaneously this can create a high demand on the available internet capacity. Prior to the event it is important to understand the application size and the likely audience so that the required capacity can be estimated. This information can then be used to ensure there is enough internet capacity available and that the Wi-Fi network deployed is suitable.
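The capacity estimate described above is simple arithmetic, sketched below. The function name and the example figures (1,000 attendees, a 100MB install, a 10-minute download window) are illustrative assumptions; the result is an average, and the peak will be higher if most downloads start at the same moment, for example straight after an on-stage announcement.

```python
def download_demand_mbps(users: int, app_size_mb: float, window_minutes: float) -> float:
    """Average internet capacity needed if `users` each download an app of
    `app_size_mb` megabytes within `window_minutes` minutes."""
    total_megabits = users * app_size_mb * 8  # megabytes -> megabits
    return total_megabits / (window_minutes * 60)


# Hypothetical launch: 1,000 attendees downloading a 100MB app within 10 minutes
print(f"{download_demand_mbps(1000, 100, 10):,.0f} Mbps")
```

Even this modest scenario needs well over 1Gbps on average, which is why the application size and audience need to be known well before the event.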

If the attendees are also going to use the application at the event there may also be considerations around latency which may rule out the use of satellite as a form of connectivity. Occasionally non-standard protocols may also be used by applications and these need to be checked before the event to ensure there are no firewalls that are likely to block the connection. This is particularly important if a mobile network is used for connectivity as they often use transparent proxies which can cause unexpected effects.

In more advanced situations local caching can be used at the event site to reduce the demand on the internet connection, however, this is a relatively complex area and needs close coordination with the application developers.

Connectivity

Internet connections have to send information in two directions – sending requests from clients up to the internet and then downloading the response. Many internet connections such as ADSL and satellite are asymmetric, which means their upload and download speeds are different. For example, a standard ADSL connection may have a download speed of 18Mbps but an upload speed of only around 0.75Mbps. For events it is in most cases important to have symmetric connections, where the upload and download speeds are the same, because these days there is just as much need for uploading as for downloading. If an asymmetric connection is used, the upload speed will often rapidly become a bottleneck, impacting performance for everyone.

The speed of internet connections is specified in ‘megabits per second’ or Mbps, so you often hear people saying things like ‘it is a 20Mbps connection’. The headline speed is only one factor in the actual user experience; another big factor is the ‘contention ratio’. In simple terms this refers to how much the internet connection is shared with other customers. A typical domestic broadband connection, for example, often has a contention ratio of around 50:1, meaning the bandwidth may be shared between as many as 50 users. Business connections are often 10:1, and a true dedicated link such as a 100Mbps fibre is 1:1, or uncontended. For events it is important to have a low contention ratio, otherwise the actual speed available may be much less than expected.
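As a rough illustration of why contention matters, the worst case is the headline speed divided by the contention ratio, i.e. everyone sharing the link is busy at once. In practice not every user is active simultaneously, so real speeds usually sit somewhere between this floor and the headline figure.

```python
def worst_case_mbps(headline_mbps: float, contention: int) -> float:
    """Speed left per customer if everyone sharing the link is busy at once."""
    return headline_mbps / contention


for label, ratio in [("domestic 50:1", 50), ("business 10:1", 10), ("dedicated 1:1", 1)]:
    print(f"20Mbps {label}: {worst_case_mbps(20, ratio):.1f} Mbps")
```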

It’s all too easy for one user to hog lots of bandwidth by streaming videos and downloading huge files, so rate limiting is used to ‘throttle’ an individual user (or sometimes groups of users) so that they can only use a certain amount of bandwidth. In its more advanced form the user may only be restricted when the connection is at capacity, allowing them more capacity when the network is less utilised. This maintains the overall experience for all users.
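One widely used way to implement a per-user limit is a token bucket. The sketch below is a generic illustration rather than the configuration of any particular product, and it implements only the basic fixed limit, not the ‘throttle only when the link is full’ behaviour described above. The 2Mbps rate and burst size are assumptions.

```python
import time
from typing import Callable


class TokenBucket:
    """Per-user rate limiter: tokens (bytes) refill at `rate_bytes_per_s`
    up to `burst_bytes`; a packet is only forwarded if enough tokens remain."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start full so a user can burst straight away
        self.clock = clock
        self.last = clock()

    def allow(self, packet_bytes: int) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last check, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False  # over the limit: the packet would be dropped or queued


# Hypothetical limit: 2Mbps (250,000 bytes/s) with a 30,000-byte burst allowance
limiter = TokenBucket(rate_bytes_per_s=250_000, burst_bytes=30_000)
forwarded = limiter.allow(1_500)  # True: the bucket starts full
```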

At some sites it is not possible to have one large internet connection, so instead several connections may be aggregated. There are two main approaches to aggregation – load balancing and bonding. Load balancing, as the name suggests, splits traffic over the different connections using a set of rules; the key aspect of load balancing is that an individual user can never get a faster speed than the maximum speed of one of the individual connections. Bonding combines all the connections into one virtual connection, which means an individual user can experience a maximum speed of roughly the sum of the individual connections. However, bonding has downsides: there is an overhead to the bonding process which reduces the overall speed, and the bonding process typically has to work based on the performance of the slowest connection, which often makes a bonded connection quite inefficient.
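The difference between the two approaches can be illustrated with a simplified model. It assumes per-user load balancing and a bonding process paced by the slowest line, as described above; the 10% overhead and the line speeds are illustrative assumptions, and real products differ in detail.

```python
def load_balanced(speeds_mbps: list[float]) -> tuple[float, float]:
    """(fastest speed one user can get, total capacity shared across all users)."""
    return max(speeds_mbps), sum(speeds_mbps)


def bonded(speeds_mbps: list[float], overhead: float = 0.1) -> float:
    """Rough speed of one virtual link when bonding is paced by the slowest line.

    The 10% overhead is an assumed figure; real bonding overheads vary by product.
    """
    return len(speeds_mbps) * min(speeds_mbps) * (1 - overhead)


lines = [20, 20, 10]  # hypothetical lines, in Mbps
print(load_balanced(lines))  # one user tops out at 20Mbps; 50Mbps in total
print(bonded(lines))         # roughly 27Mbps, available even to a single user
```

With mismatched lines the bonded link here delivers barely half of the combined 50Mbps, which is the inefficiency described above.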

Whenever you use the internet, digital packets of data are being sent to and received from the website or service you are using. Generally this happens incredibly quickly, with a round trip taking a matter of milliseconds, so you are unlikely to pay much attention to how long it takes. However, the Round Trip Time, or latency, can be more significant on certain types of internet connection. A poor ADSL connection, for example, may be over 100ms, while a satellite connection will always be around 600ms. This may not sound significant, but it is when you consider how many packets of data are going backwards and forwards. The effect of latency is particularly significant for voice conversations and encrypted traffic such as VPNs.
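One concrete way latency caps speed: a TCP connection can only have one receive window of unacknowledged data in flight per round trip, so a single connection cannot exceed window size divided by RTT. The sketch assumes the classic 64KB maximum window that applies when TCP window scaling is not in use; modern systems usually negotiate larger windows, but higher latency still lowers the ceiling. The 10ms ‘good fibre’ figure is an assumption.

```python
def window_limited_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on single-connection TCP throughput: one window per round trip."""
    bits_per_round_trip = window_bytes * 8
    return bits_per_round_trip / (rtt_ms / 1000) / 1_000_000


for name, rtt in [("good fibre", 10), ("poor ADSL", 100), ("satellite", 600)]:
    print(f"{name:>10}: {window_limited_mbps(65_535, rtt):6.2f} Mbps")
```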

ADSL is well known as a mainstream home broadband technology because it runs over regular telephone lines and is widely available. One of the downsides of ADSL is that it is asymmetric, meaning the download speed is much faster than the upload speed. Some offerings are also highly contended, leading to erratic performance. It is still widely used on event sites because of its availability and short installation lead times, but it is being replaced by FTTC (Fibre to the Cabinet) and high-speed optic fibre services. As ADSL requires a copper wire connection, it can only be activated once a traditional phone line is installed.

Satellite connectivity creates more questions and churn than any other form of connectivity, primarily because it is often mis-sold. A modern Ka-band system is quick to deploy, relatively cheap and has enticing headline speeds of up to 20Mbps download and 6Mbps upload. However, there are some significant limitations. Firstly, the receiving dish requires ‘line of sight’ to the southern horizon, which often means it needs some height to see over buildings and trees.

Secondly, the standard consumer and business packages are contended, meaning the available bandwidth is shared with other satellite users; this may be as high as 50:1, leading to erratic and poor performance. There are alternative services such as ‘Newspotter’ which have lower or no contention, but these cost significantly more. Data on satellite can also be quite expensive, especially outside the standard packages.

The big problem with satellite is latency – the time it takes for packets of data to travel up to the satellite and back down. This will always be around 600ms and causes issues for any ‘real-time’ applications such as online gaming, voice (VoIP), two-way video (one-way video streaming is fine) and most VPN services. Even normal web browsing is a very different experience on satellite compared with other methods of connectivity.
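The ‘around 600ms’ figure is largely physics. A geostationary satellite sits about 35,786km above the equator, and a request and its reply each have to travel up to it and back down, so the signal crosses that gap four times. The sketch below computes this minimum propagation delay; a dish in the UK is not directly beneath the satellite, so the real path is longer, and modem, processing and onward routing delays account for the rest.

```python
GEO_ALTITUDE_KM = 35_786          # geostationary orbit height above the equator
SPEED_OF_LIGHT_KM_S = 299_792.458


def geo_propagation_rtt_ms() -> float:
    """Minimum round trip via a geostationary satellite.

    Request: dish -> satellite -> ground station (two crossings).
    Reply: ground station -> satellite -> dish (two more crossings).
    Uses the altitude as the distance, i.e. a dish directly below the satellite.
    """
    return 4 * GEO_ALTITUDE_KM / SPEED_OF_LIGHT_KM_S * 1000


print(f"{geo_propagation_rtt_ms():.0f}ms of propagation delay before any processing")
```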

All things considered, though, satellite has its place and is widely used; for some needs, such as video streaming or very quick deployments where no other service is available, it is ideal. The key point is setting expectations correctly.

With the advent of 4G the use of mobile broadband has increased significantly and in some areas the speed of the connection is good enough to support a number of users. For professional use high gain antennas can be used to improve the signal and often multiple 4G connections are aggregated to provide more capacity and improve reliability, especially when the connections are aggregated from different mobile operators.

Data costs can be significant, so 3G/4G is not ideal for high volumes, but most importantly 3G/4G cannot be relied on for critical services. Although there are ways to improve the service, there is always the risk that it will become overloaded by other users in the area. This is particularly significant for events, where the service may run fine until attendees arrive and then performance falls off.

In towns and cities the service is quick to deploy and great for general non-critical use; in more rural areas capacity can be an issue even if the signal strength is relatively good.

With the increased availability and falling price of optic fibre, EFM (Ethernet in the First Mile) is less common than it was a few years ago. EFM runs over traditional copper cables but in effect bonds a number of lines together to provide a symmetric, uncontended service, typically up to around 30Mbps. It requires special equipment in the telephone exchange and at the client end, so it is only available in certain exchanges, mainly in large cities. It is cheaper than optic fibre; however, for short-term needs a WISP/Wireless Point-to-Point Link may be a more cost-effective option.

Getting high capacity internet connectivity to locations at short notice can be very tricky as generally there is a long lead time on any wired or fibre circuits. Using a wireless point-to-point link from a WISP (Wireless Internet Service Provider) can be a great solution if the conditions allow. As the name suggests these links are created using a special type of wireless adapter, typically from the roof of a building or a mast. In general these links require ‘line of sight’ between the two points and work at one of several frequencies to minimise interference. Depending on the location and distance speeds similar to optic fibre can be achieved.

Wireless point-to-point links are relatively expensive and require some planning and assessment, so they are not suitable in all cases. There is also still a risk of interference causing problems even though special frequencies are used; however, for critical events this risk can be minimised by using two links from different locations running at different frequencies.

FTTC is often better known by consumer marketing names such as BT Infinity. It is a stepping stone to true fibre (FTTP – Fibre to the Premises), as the last part of the service is still delivered over the existing copper wires, meaning that the headline speeds are only available within close proximity to an enabled cabinet, normally around 800m. The benefit over ADSL is that the upload speed is considerably better, anything up to around 19Mbps depending on various factors. Download speed is also generally much faster, typically with limits of around 50Mbps. The services are contended at different levels depending on the service purchased, with business services offering a lower contention ratio.

The primary downside of FTTC is availability with many areas either not having it at all, or suffering limited roll-out. Often initial surveys will say that FTTC is available but at the time of install it is discovered that the particular cabinet which connects to the install location is not enabled for FTTC. Because FTTC is delivered over copper wires a traditional phone line has to be installed prior to the FTTC service being installed.

True optic fibre is where all events would ideally be – high speed, low contention, reliable and very low latency. Only a few years ago a 100Mbps fibre connection on site was a luxury; now many sites are moving to 1Gbps fibre connections as demand continues to increase. Cost has fallen significantly over the last few years, but it is still a significant investment which tends only to work where events will be using the same location for at least 3 years.

The primary problem with fibre is the lead time to install, typically at least 3 months and often longer, coupled with what are known as ‘ECCs’ or Excess Construction Charges. Because a fibre connection requires an optic fibre cable all the way to the final location, it often requires significant physical installation: a new fibre being ‘blown’ through a duct, or an actual physical duct being installed requiring ground works. Depending on the distance to the nearest location which has a fibre point, these ECCs can run from just a few hundred pounds to over £100k! Any new fibre installation is subject to a survey, after which any ECCs are detailed and the order can be cancelled if required.

There are many good aspects of fibre, though, from a normally 1:1 contention ratio through to the ability to ‘burst’ capacity. Bursting means that a connection can run at a much lower speed for most of the year, with a ‘burst’ to a higher speed only when required. This approach can keep the cost down significantly.

GDPR

GDPR does apply to CCTV, particularly in relation to public areas. For events, consideration should be given to cameras which may be able to see people who are not part of the event, for example around entrances and the perimeter. For each camera an assessment should be undertaken and recorded, including:

– Why do you need that camera there?

– What will the camera be used for?

– What else, if anything, could you do to achieve the same objective without having a camera?

You must specifically have users ‘Opt In’ when collecting personal data. Plain language should be used to explain:

– Why you are collecting the data

– How it will be stored

– Who will have access to it

– What you will be doing with it

– How they can remove it from your systems

Examples of aspects which may impact privacy include:

– CCTV images of people who are not within the event (for example perimeter CCTV)

– Network usage data of users communicating with those outside of the event network

– Any customer data collected for customer marketing purposes

– Contractor personal details

You must consider all personal data, including that of customers, suppliers, contractors and employees. You need to look at how it is collected, stored, shared with others (internally and externally) and accessed (internally and externally).

Under GDPR, personal data is any information relating to an individual, whether it relates to his or her private, professional or public life. It can be anything from a name, a home address, a photo, an email address, bank details, posts on social networking websites, medical information, or a computer’s IP address.

The General Data Protection Regulation (GDPR) is a European Union regulation relating to personal data, its use and storage, which came into effect on 25 May 2018. It replaced the previous Data Protection Directive of 1995 and has far-reaching implications: it affects any business, EU-based or not, which has EU users or customers.

General Questions

Many people complain that their broadband or internet seems to slow down in the evening, and often this is true. The issue is that most broadband providers ‘contend’ their services for capacity reasons, which means if everyone uses it at the same time there will not be enough capacity. This is how internet access is made affordable for home users: the providers bet that not everyone will need it at the same time (a bit like a motorway, which cannot cope with everyone at rush hour).

Evenings are generally peak time for home broadband usage with more people at home. The problem has become worse as streaming has become more popular with services such as BBC iPlayer and all this simultaneous demand simply exceeds the capacity at the local exchange leading to a drop in performance for all users. More expensive broadband services use a lower contention ratio which mitigates this problem.

It’s not unusual to be waiting a week or two for broadband to be installed in a new house or business premises, which can be a real problem if there is not good 3G/4G coverage as an alternative. We are often asked whether we can provide a temporary solution, and the answer is generally yes via solutions such as satellite; however, the cost of this is much higher than consumer broadband.

When you first connect to an open Wi-Fi network such as a public one, you are often greeted by a webpage which asks for some login information or sign-up details before you can proceed to use the internet. This is commonly called a splash page, hijack page or, more correctly, a ‘captive portal’ and is used to control access to the internet. These pages may be branded with logos and sponsor information and typically offer one of three types of access:

- Open access with just a ‘click to accept terms and conditions’

- Open access after entering some basic information such as an email address

- Authenticated access using a code or username/password approach

After logging in, the user may be redirected to a ‘landing’ page (often a page the provider wants to promote) or directed onwards to the originally requested page.

On some public access Wi-Fi networks a user may only be allowed access to a limited number of websites unless they pay for premium access. This approach is known as a ‘walled garden’, typically with a number of websites ‘whitelisted’ which the provider wishes to promote or provide free access to.
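At its simplest, a walled garden is a whitelist check on the destination of each request. The sketch below shows only that matching logic, using hypothetical domain names; a real captive portal enforces the whitelist in its firewall, DNS or proxy rather than in a standalone function.

```python
def in_walled_garden(host: str, allowed_domains: set[str]) -> bool:
    """True if `host` is a whitelisted domain or a subdomain of one.

    `allowed_domains` are expected in lower case, without a trailing dot.
    """
    host = host.lower().rstrip(".")  # ignore case and a trailing root dot
    return any(host == d or host.endswith("." + d) for d in allowed_domains)


# Hypothetical whitelist: the event's own site and a sponsor's site
garden = {"example-event.com", "sponsor.example"}
print(in_walled_garden("tickets.example-event.com", garden))  # True
print(in_walled_garden("notexample-event.com", garden))       # False
```

Matching on a leading dot matters: a plain ‘ends with’ check would wrongly let through look-alike domains such as the second example.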

Proxy servers have long been used to provide a level of content caching, improving performance and protection for users accessing the internet. Typically the details of the proxy server are entered into the settings of the user’s preferred web browser. More often now these settings may be downloaded automatically by way of a proxy configuration script. As more and more content has moved to being encrypted via https and is more likely to be dynamically generated, the ‘success’ rate of proxy cache hits has dropped, meaning they are no longer very effective in many cases.

A variant of the standard proxy is the Transparent Proxy (also known as an intercepting or inline proxy) which intercepts communications at the network layer without any special client configuration. These devices are sometimes used by network carriers such as mobile phone operators as it gives them control over content. The problem with transparent proxies is that they have to work without creating any impact on the communication between the client and the server which is a complex task. The result is that unusual problems may be seen with web pages not loading correctly or protocols being blocked because the transparent proxy does not support them.

Whenever using a mobile network (or any provider where the path to the internet is not explicitly known) for a critical event or launch it is important to test any applications or websites prior to the event to ensure they work correctly.

Payment Systems/PDQs

There are four primary types of PDQ (Process Data Quickly) machine or ‘chip and pin’ terminal:

- Telephone Line (PSTN – Public Switched Telephone Network) – This is the oldest and, until a few years ago, the most common type of device. It requires a physical telephone line between the PDQ modem and the bank. It’s slow, difficult and very costly to use at event sites because of the need for a dedicated physical phone line; however, once it is working it is reliable.

- Mobile PDQ (GPRS/GSM) – Currently the most common form of PDQ, it uses a SIM card to connect back to the bank over a mobile network using GSM or GPRS. Originally seen as the go-anywhere device, in the right situation they are excellent; however, they have limitations, the most obvious being that they require a working mobile network to operate. At busy event sites the mobile networks rapidly become saturated, which means the devices cannot connect reliably. As they use older GPRS/GSM technology they are also very slow, and it makes no difference if you try to use the device in a 4G area, as it can only work using GPRS/GSM. As they use the mobile operator networks they may also incur data charges.

- Wi-Fi PDQ – Increasingly common, this version connects to a Wi-Fi network to get its connectivity to the bank. On the surface this sounds like a great solution, but there are some challenges. Firstly, it needs a good, reliable Wi-Fi network. Secondly, many Wi-Fi PDQs still operate in the 2.4GHz Wi-Fi spectrum, which on event sites is heavily congested and suffers lots of interference, making the devices unreliable. This is not helped by the relatively weak Wi-Fi components in a PDQ compared with, for example, a laptop. It is essential to check that any Wi-Fi PDQ is capable of operating in the less congested 5GHz spectrum.

- Wired IP PDQ – Often maligned because people think it doesn’t have a ‘wireless’ handset, but it is actually the same as all the others: the handset is wireless, and it is the base station that uses a physical wire (cat5) to connect to a network. In this case the network is a computer network using TCP/IP, and the transactions are routed in encrypted form across the internet. If a suitable network is available on an event site then this type of device is the most reliable and fastest, and there are no call charges.

All of these units look very similar and in fact can be built to operate in any of the four modes, however, because banks ‘certify’ units they generally only approve one type of connectivity in a particular device. This is slowly starting to change but the vast majority of PDQs in the market today can only operate on one type of connectivity and this is not user configurable.

On top of these aspects there is also the difference between ‘chip & pin’ and ‘contactless’. Older PDQs typically can only take ‘chip & pin’ cards whereas newer devices should also be enabled for contactless transactions.

Electronic Point of Sale (EPOS) systems are now commonplace at events and often require access to a network for communications between units, configuration management and sometimes stock control integration. Some EPOS systems have integrated credit card payment terminals whereas others use external PDQs. Generally EPOS systems require a wired cat5 connection to the network and this needs to be factored into requirements.

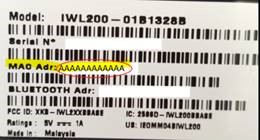

Every piece of network equipment has a MAC address (Media Access Control address) which is used to uniquely identify it and this includes PDQs. On event sites knowing the MAC address of PDQs is important so that they can be identified on the network for purposes such as ‘whitelisting’ which enables the device to work on a secure network without having to enter login information for example.

The MAC address can usually be found on the back of the base unit/docking station and begins with “MAC No. XXXXXXXXXXXXX”

Please see an example below which can help identify the MAC address.

If you can’t see a MAC address it might be that your PDQ is not network compatible and may require a phone line. The best thing to do is call your merchant provider and ask them.
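MAC addresses are written in several notations (with colons, hyphens, dots or no separators at all), so when compiling a whitelist it helps to normalise them into one form first. The sketch below assumes the input is just the address, without the ‘MAC No.’ label, and the example addresses are hypothetical.

```python
import re

_SEPARATORS = re.compile(r"[:\-.\s]")


def normalise_mac(raw: str) -> str:
    """Convert common MAC notations (00-1a-2b-..., 001a.2b3c.4d5e, 001A2B...)
    to the form AA:BB:CC:DD:EE:FF, raising ValueError for anything else."""
    digits = _SEPARATORS.sub("", raw.strip())
    if not re.fullmatch(r"[0-9A-Fa-f]{12}", digits):
        raise ValueError(f"not a 12-digit MAC address: {raw!r}")
    digits = digits.upper()
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


# Hypothetical PDQ addresses as copied from base-unit labels
whitelist = {normalise_mac(m) for m in ["00-1a-2b-3c-4d-5e", "001A.2B3C.4D5F"]}
print(normalise_mac("00:1A:2B:3C:4D:5E") in whitelist)  # True
```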

Mobile PDQs need internet connectivity of some form to operate: some are designed to use Wi-Fi, some use a wired network connection, but most use the mobile phone network to connect. The connection to the mobile phone network in most PDQ terminals is GPRS rather than 3G or 4G. On a busy event site the mobile PDQ has to contend with all the other devices trying to connect to the mobile network, which often struggles with the demand, so PDQ transactions fail. GPRS terminals are particularly prone to problems as GPRS is an old and slow technology which does not perform well under heavy load.

The best approach at events is to use a Wi-Fi or wired IP terminal as these avoid the mobile networks, connecting to a dedicated network for payments instead. Many events offer a rental service or suggest a recommended supplier.

Resources and Checklists

Download the Event IT Checklist document here

A simple set of questions for reviewing connectivity at venues and assessing the associated risks and considerations.

Site Networking

The design of computer networks tends to be based on their size and the specific needs of different user groups for aspects such as security and performance. The design can be very complex but roughly breaks into three categories:

Flat Network

This is the most basic network and, as the name suggests, is totally flat, meaning that all devices can see one another and there is little control over the network, which has led to these being known as ‘unmanaged’ networks. Flat networks are only used in the smallest and most basic of set-ups as they have a number of inherent issues such as potential network storms, limited security and poor traffic routing.

Managed Network / Layer 2

A managed network is a step up from an unmanaged network as it introduces VLANs (Virtual LANs), which separate the network into a number of virtual networks, offering more security and the ability to shape and segregate traffic. Layer 2 networks are fine for medium-sized networks but can still suffer from network storms or performance issues as they grow because, in effect, they are still one big network.
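On the cable itself, VLAN separation is usually done with the IEEE 802.1Q standard: each Ethernet frame carries a small 4-byte tag saying which VLAN it belongs to, and managed switches only deliver it to ports in that VLAN. The Python sketch below builds that tag to show what is inside it. The function name `vlan_tag` and the example VLAN number 20 are illustrative assumptions; on a real site the switches add and remove these tags for you.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks a frame as VLAN-tagged (IEEE 802.1Q)


def vlan_tag(vlan_id: int, priority: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag that a managed switch inserts into a frame header."""
    if not 1 <= vlan_id <= 4094:  # 0 and 4095 are reserved
        raise ValueError("VLAN ID must be between 1 and 4094")
    if not 0 <= priority <= 7:
        raise ValueError("priority must be between 0 and 7")
    if dei not in (0, 1):
        raise ValueError("DEI must be 0 or 1")
    # Tag Control Information: 3-bit priority, 1-bit drop-eligible flag, 12-bit VLAN ID.
    tci = (priority << 13) | (dei << 12) | vlan_id
    # Network byte order: 2-byte TPID followed by 2-byte TCI.
    return struct.pack("!HH", TPID_8021Q, tci)


print(vlan_tag(20, priority=5).hex())  # 8100a014
```

The 12-bit VLAN ID field is why a single layer 2 network is limited to roughly 4,000 VLANs, and the priority bits are one of the ways traffic such as voice can be given preferential treatment.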

Routed Network / Layer 3

The next step up is a fully routed or ‘layer 3’ network. This type of network uses multiple routers to create totally separate zones, improving performance and offering better options for redundant paths, security and isolation. A key point is that an issue such as a network storm in one zone is contained within that zone and does not affect the others. Routed networks are more complex to set up but are particularly suited to scenarios where, for example, an event site wants a redundant optic fibre ring between all key locations for the best performance and reliability. We use layer 3 networks on all the major sites on which we operate.
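In practice each zone in a routed network gets its own IP subnet, and the routers carry traffic between subnets. As a hedged sketch of that addressing step, the Python below uses the standard `ipaddress` module to carve one site-wide block into a separate /24 per zone. The address block 10.20.0.0/16, the zone names and the choice of the first usable address for each zone’s router are hypothetical examples, not a recommended plan.

```python
import ipaddress

# Hypothetical zones for a site; each gets its own routed subnet.
ZONES = ["production office", "box office", "cctv", "public wifi"]


def plan_zones(site_block: str, zones: list[str], prefix: int = 24) -> dict[str, ipaddress.IPv4Network]:
    """Allocate one non-overlapping subnet per zone from a site-wide address block."""
    subnets = ipaddress.IPv4Network(site_block).subnets(new_prefix=prefix)
    plan: dict[str, ipaddress.IPv4Network] = {}
    for zone in zones:
        net = next(subnets, None)
        if net is None:
            raise ValueError(f"{site_block} has room for fewer than {len(zones)} /{prefix} subnets")
        plan[zone] = net
    return plan


for zone, net in plan_zones("10.20.0.0/16", ZONES).items():
    # Using the first usable address for the zone's router is a convention, not a rule.
    print(f"{zone:<18} {net}  router {next(net.hosts())}")
```

Because the subnets never overlap, a broadcast in the CCTV zone, for example, stays in 10.20.2.0/24 and never reaches the box office.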

Event sites can be challenging for IT infrastructure due to the way the build progresses, lack of routes for cables and last minute changes. There are several techniques used to deploy the required infrastructure across a site and one approach is the use of ‘wireless links’, also known as Point-to-Point (PTP) or Point-to-MultiPoint (PTMP) links. These units are like a special form of Wi-Fi, generally operating in the 5GHz spectrum and often using a focused beam to improve performance.

Wireless links can be a great way of getting connectivity to difficult parts of a site, but they have to be used carefully to ensure they do not interfere with each other. Structures, and trees in particular, can block the signal, leading to drop-outs or poor performance, so wireless links generally need a clear ‘line of sight’ between each end.
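‘Line of sight’ for a wireless link means a little more than being able to see the far end: the signal occupies an elongated region around the direct path, known as the first Fresnel zone, and obstructions inside it degrade the link. The Python sketch below uses the standard formula for the radius of that zone. The 500m link length and 5.5GHz frequency are example values, and the 60% clearance figure is a commonly quoted rule of thumb that we are assuming here rather than a formal requirement.

```python
import math
from typing import Optional

SPEED_OF_LIGHT_M_S = 299_792_458.0


def fresnel_radius_m(link_m: float, freq_ghz: float, point_m: Optional[float] = None) -> float:
    """Radius of the first Fresnel zone at a distance point_m from one end of a link."""
    if point_m is None:
        point_m = link_m / 2  # the zone is widest halfway along the link
    if not 0 <= point_m <= link_m:
        raise ValueError("point_m must lie on the link")
    wavelength_m = SPEED_OF_LIGHT_M_S / (freq_ghz * 1e9)
    d1, d2 = point_m, link_m - point_m
    return math.sqrt(wavelength_m * d1 * d2 / link_m)


radius = fresnel_radius_m(500, 5.5)
print(f"first Fresnel zone radius at midpoint: {radius:.1f} m")  # 2.6 m
print(f"60% clearance needed: {0.6 * radius:.1f} m")  # 1.6 m
```

A tree canopy or a marquee roof that sits a metre or so below the visual line between two dishes can therefore still intrude into the zone, which is why links mounted on tall masts tend to perform better.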

On an event site it is very important for wireless spectrum usage to be coordinated centrally; otherwise there can be a great deal of interference which prevents links from working correctly.

Cat5 is the primary type of cable used for networking and connects most pieces of equipment on site, such as phones, switches and CCTV cameras. The main downside of cat5 is that the maximum cable length is 100m, although special in-line adapters known as PoE extenders can increase this to 200 or 300m. Cat5 is particularly flexible as it can also carry power to certain types of equipment such as phones (known as Power over Ethernet, or PoE), which means no additional power supply is required where the device is located. Generally cat5 cable is capable of speeds up to 1Gbps, although the actual speed experienced may be reduced by the switching equipment and the speed of the connection to the internet.
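When planning a long cable run, a quick check is how many extenders it needs so that no single segment exceeds 100m. The Python sketch below does that arithmetic under the simplifying assumption that each extender starts a fresh 100m segment. Real products vary, and how many can be chained also depends on the product and the power available, so treat the result as a starting point and check the manufacturer’s figures.

```python
import math

MAX_SEGMENT_M = 100  # maximum cat5 run between active devices


def extenders_needed(run_m: float, max_segment_m: float = MAX_SEGMENT_M) -> int:
    """Minimum number of in-line extenders so that no segment exceeds the limit."""
    if run_m <= 0:
        raise ValueError("cable run must be a positive length")
    segments = math.ceil(run_m / max_segment_m)
    return segments - 1  # one extender sits between each pair of segments


for run in (80, 180, 300):
    print(f"{run}m run: {extenders_needed(run)} extender(s)")
```

This reproduces the 200m and 300m figures above with one and two extenders respectively; beyond that, optic fibre is usually the better option.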

Increasingly, optic fibre cable is being used for core links on sites as it can run at higher speeds over much larger distances. Some types of optic fibre cable also have much stronger outer protection (armouring), which means they can withstand the event environment better than cat5. The downside of optic fibre is that it requires special tools to terminate and connect, and it is more difficult to join if it gets cut.

Voice Communication (4)

The PSTN line has been around for more than 100 years and is still a critical part of communications infrastructure. It is a simple two-wire copper cable that carries the voice service and, more often than not, an ADSL or FTTC broadband service. It is reliable, doesn’t require a local power supply, can run over large distances and is simple to connect. With such a huge existing country-wide infrastructure, lines can often be installed very quickly.

Installation and monthly costs have been rising, though, meaning that for multiple lines (other than essential emergency ones) VoIP (Voice over IP) is a much more cost-effective solution because it uses the data network that nearly all event sites now have.

Technically speaking, ISDN is a data service rather than voice, being the early digital solution before ADSL. For many years, though, it was the way of delivering multiple voice connections effectively, and it is still very popular in the world of radio broadcast. Its popularity stems from the fact that it creates an end-to-end connection between, for example, the outside broadcast location and the studio, giving very low latency and high reliability. ISDN costs significantly more than PSTN and is not quite so widely available.

The use of ISDN is falling rapidly due to its cost and the increasing confidence of broadcasters in using newer IP based solutions which run over existing internet connections.

Traditional phone lines use a physical connection, which means every phone needs a separate wire. With modern networking, VoIP phones can operate over the computer network, providing a much more flexible approach to deployment. In addition, VoIP call charges are lower than those of traditional phones, providing an opportunity for cost saving. VoIP phones can also provide a wider range of features such as voicemail, caller line identification, call forwarding and call waiting. On an event site the phones connect to the rest of the phone network via an internet gateway, which can use most forms of internet connectivity.
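Because VoIP phones share the site network, it is worth estimating how much bandwidth they will use. The Python sketch below shows the standard arithmetic for one call: codec payload plus IPv4, UDP and RTP headers on every packet. The defaults assume the G.711 codec (64 kbps) with 20ms packets, a common configuration but not the only one, and the figure excludes Ethernet or Wi-Fi framing, which adds a little more on each link.

```python
# Header sizes in bytes for voice carried over IP.
IP_UDP_RTP_HEADER_BYTES = 20 + 8 + 12  # IPv4 + UDP + RTP


def voip_call_kbps(codec_kbps: float = 64.0, packet_ms: float = 20.0) -> float:
    """One-way IP-layer bandwidth of a single call, excluding Ethernet/Wi-Fi framing."""
    packets_per_second = 1000.0 / packet_ms
    payload_bytes = codec_kbps * 1000 / 8 / packets_per_second
    bits_per_packet = (payload_bytes + IP_UDP_RTP_HEADER_BYTES) * 8
    return bits_per_packet * packets_per_second / 1000


per_call = voip_call_kbps()
print(f"{per_call:.0f} kbps per call, {20 * per_call / 1000:.1f} Mbps for 20 calls")
```

With these assumptions one call needs 80 kbps in each direction, so even a busy production office is a small load compared with public Wi-Fi, although voice is sensitive to delay and is often given priority on the network.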

The Mobile Network Operators (MNOs) such as Vodafone and EE use an entirely separate set of networks for their services compared to Wi-Fi and computer networking. On an event site, devices such as mobile phones can use any onsite Wi-Fi for data but not natively for their calls. Some operators are now releasing applications which facilitate this, but it depends on the operator and the specific contract. The coverage and capacity of the mobile networks on an event site are entirely controlled by the mobile operators and there is no legal way to improve them without their assistance.

Wi-Fi (4)

If you talk to a technical person about Wi-Fi then eventually the subject of 2.4GHz comes up, along with a list of issues. The root of this goes back to the early days of Wi-Fi and how wireless spectrum was allocated. Wi-Fi operates mainly in two frequency bands, 2.4GHz and 5GHz, but until recently the vast majority of devices and equipment only operated in the 2.4GHz band. This was due to early aspects of licensing and manufacturing, which led to rapid adoption of 2.4GHz and much slower adoption of 5GHz.

The problem is that the 2.4GHz band is not just used by Wi-Fi: it is shared with Bluetooth, baby monitors, various audio and video senders and pretty much anything else that needs an unlicensed frequency band. It is also the frequency that microwave ovens use, and yes, that can cause problems in kitchens! The upshot is that the band is overcrowded, so Wi-Fi is fighting amongst a lot of wireless noise, generally leading to reduced or intermittent performance.

On top of this, the spectrum actually available to Wi-Fi at 2.4GHz is very limited. In theory there are 13 channels, but because neighbouring channels overlap, in reality only 3 of them (typically channels 1, 6 and 11) can be used without interfering with one another, which makes large-scale deployments very difficult to design. The situation on event sites is so bad that 2.4GHz can be almost unusable. The good news is that most mobile device manufacturers have increasingly incorporated 5GHz support into their devices over the last few years.
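The reason so few 2.4GHz channels are usable is simple arithmetic: channel centres are only 5MHz apart, but each Wi-Fi signal is around 20 to 22MHz wide. The Python sketch below picks channels whose centres are at least one signal width apart. It assumes the 22MHz width of the original 802.11b signal, which gives the familiar 1, 6 and 11; other widths and regional channel rules give slightly different answers.

```python
def channel_centre_mhz(channel: int) -> int:
    """Centre frequency of a 2.4GHz Wi-Fi channel (channels 1 to 13)."""
    if not 1 <= channel <= 13:
        raise ValueError("this sketch only covers channels 1 to 13")
    return 2407 + 5 * channel


def non_overlapping(width_mhz: int = 22) -> list[int]:
    """Greedily pick channels whose centres are at least one signal width apart."""
    chosen: list[int] = []
    for ch in range(1, 14):
        if not chosen or channel_centre_mhz(ch) - channel_centre_mhz(chosen[-1]) >= width_mhz:
            chosen.append(ch)
    return chosen


print(non_overlapping())  # [1, 6, 11]
```

Any access point placed on, say, channel 3 overlaps both channel 1 and channel 6, so in a dense deployment it adds interference rather than capacity.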

Overall the 5GHz band has much more spectrum allocated, meaning more channels are available and there is less interference from other devices (although radar uses 5GHz, as does some meteorological equipment). Today 5GHz is much less crowded than 2.4GHz and provides a much better user experience; however, with the widescale adoption of 5GHz in consumer products such as Mi-Fi units, the situation is changing and we may well see increasing problems at 5GHz over time.

Most Wi-Fi deployments are set up to deal with a relatively low number of concurrent users, often only tens or hundreds of users in a fairly wide area such as a cafe or an office. This approach is straightforward, requiring a small number of wireless access points. At many events the number of concurrent users may easily run into the thousands, typically in highly concentrated areas. Providing a good service to this density of users requires a much more complex approach.

The challenge arises because an individual wireless access point can only support a limited number of users: even a high-end professional unit will only serve around 100-200 users effectively, depending on the type of usage. However, it is not as simple as just adding more wireless access points, because unless careful attention is paid to the design, the access points will interfere with each other and create more problems.
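A rough first figure for a high-density area is the number of concurrent users divided by what one access point can serve. The Python sketch below does that division, defaulting to 150 users per access point as an assumed mid-point of the 100-200 range above. It gives only a lower bound: channel planning, placement and controlling signal spread, as described above, determine whether those access points actually deliver that capacity.

```python
import math


def minimum_access_points(concurrent_users: int, users_per_ap: int = 150) -> int:
    """Lower bound on access points for a crowd, from per-AP capacity alone."""
    if users_per_ap <= 0:
        raise ValueError("users_per_ap must be positive")
    return math.ceil(concurrent_users / users_per_ap)


for per_ap in (100, 150, 200):
    print(f"5,000 users at {per_ap} per AP: at least {minimum_access_points(5000, per_ap)} APs")
```

For a hypothetical crowd of 5,000 concurrent users this gives somewhere between 25 and 50 access points, all of which must share a limited number of channels without interfering with each other.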

Many large Wi-Fi deployments suffer from poor design, leading to a bad user experience. True ‘high density’ design requires careful analysis of the wireless spectrum and often uses special equipment to control the spread of wireless signals.

Because Wi-Fi is a broadcast technology that passes through the open air, anyone with the right equipment can pick up the signal. For this reason it is very important that the signals are encrypted to stop information being intercepted by the wrong people. One of the most common ways of encrypting a Wi-Fi network is a technology called WPA2 (Wi-Fi Protected Access 2).

WPA2 is commonly set up with a Pre-Shared Key (PSK). This alphanumeric string should only be known by those who need access to the network, who enter the key when connecting. The potential problem with this approach is that the PSK is used to generate the encryption key, so if you use a weak key the network is left open to a fairly simple attack which can gain access within minutes.
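In WPA2-Personal the key the user types is turned into the network’s master key with PBKDF2-HMAC-SHA1, using the network name (SSID) as the salt, 4,096 iterations and a 256-bit output. The Python sketch below reproduces that step with the standard library. It shows why a weak key is dangerous: an attacker who captures a device connecting can run this same derivation offline for every guess in a word list, without ever touching the network. The function name `wpa2_pmk` and the example SSID and key are our own.

```python
import hashlib


def wpa2_pmk(passphrase: str, ssid: str) -> bytes:
    """Derive the WPA2-Personal pairwise master key from a passphrase and network name."""
    if not 8 <= len(passphrase) <= 63:
        raise ValueError("WPA2 passphrases must be 8 to 63 characters")
    # PBKDF2-HMAC-SHA1, salted with the SSID, 4096 iterations, 32-byte (256-bit) output.
    return hashlib.pbkdf2_hmac("sha1", passphrase.encode(), ssid.encode(), 4096, 32)


print(wpa2_pmk("example-key-123", "EventProduction").hex())
```

The 4,096 iterations slow each guess down, but not enough to protect a short or dictionary-based key.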

The solution is simple: longer and more complex keys! Every character added multiplies the number of possible keys an attacker has to try, so the cracking effort grows very quickly. The question is how long the key needs to be. There is no agreed answer, as it depends on how random the key is. A truly random key of 10 alphanumeric characters is actually very hard to break, taking many years, but a key of similar length made from dictionary words could be broken very quickly.
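The difference between a random key and a dictionary-word key can be put into numbers. The Python sketch below estimates the size of each key space in bits and the worst-case time to try every key. The attack speed of one million guesses per second and the 20,000-word dictionary are hypothetical figures chosen for illustration; real attackers can be faster or slower, and the model assumes each character or word is chosen at random.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600
GUESSES_PER_SECOND = 1_000_000  # hypothetical attacker speed; real rigs vary widely


def keyspace_bits(symbols: int, pool_size: int) -> float:
    """Entropy of a key made of `symbols` items, each chosen at random from `pool_size` options."""
    return symbols * math.log2(pool_size)


def seconds_to_exhaust(bits: float, guesses_per_second: float = GUESSES_PER_SECOND) -> float:
    """Worst-case time to try every possible key."""
    return 2 ** bits / guesses_per_second


# 10 random characters from a-z, A-Z and 0-9 (62 symbols).
random_key = keyspace_bits(10, 62)
# Two words picked at random from an assumed 20,000-word dictionary.
word_key = keyspace_bits(2, 20_000)

print(f"{random_key:.1f} bits, about {seconds_to_exhaust(random_key) / SECONDS_PER_YEAR:,.0f} years")
print(f"{word_key:.1f} bits, about {seconds_to_exhaust(word_key):,.0f} seconds")
```

Under these assumptions the random 10-character key takes roughly 26,600 years to exhaust, while the two-word key falls in about 400 seconds, even though the word-based key may be just as long when typed.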

To be safe we normally recommend a minimum of 12 characters following typical password rules: upper and lower case, numeric characters, special characters and no dictionary words unless they have character replacements.
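One way to meet these rules without inventing keys by hand is to generate them. The Python sketch below uses the standard `secrets` module, which is designed for security-sensitive randomness, and retries until every character class is present. The default length of 16 and the particular set of special characters are our own assumptions; some devices handle quotes or spaces poorly, so those are left out.

```python
import secrets
import string

SPECIALS = "!#$%&*+-=?@^_"  # assumed safe set; quotes and spaces deliberately excluded
ALPHABET = string.ascii_letters + string.digits + SPECIALS


def generate_psk(length: int = 16) -> str:
    """Random WPA2 key containing upper case, lower case, a digit and a special character."""
    if not 12 <= length <= 63:  # 12 is our recommended minimum; 63 is the WPA2 maximum
        raise ValueError("use 12 to 63 characters")
    while True:
        key = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry until every character class from the policy is present.
        if (any(c.islower() for c in key) and any(c.isupper() for c in key)
                and any(c.isdigit() for c in key) and any(c in SPECIALS for c in key)):
            return key


print(generate_psk())
```

Because every character is drawn at random, the result contains no dictionary words by construction, which is the property that matters most for resisting the attack described above.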

Of course, a strong key only remains strong while it is known only by those who should know it. This is a weakness of the shared key approach: if the key is leaked, security across the whole network is compromised. Additional measures can be introduced to improve security further. For example, a technique called Dynamic Pre-Shared Key (D-PSK) gives each user their own unique key, so there is no single shared key to leak. More advanced set-ups use electronic certificates rather than passwords. With the right set-up, Wi-Fi networks can be very secure, arguably more so than most wired networks!

Wi-Fi encryption is generally used to stop unintended people snooping on your Wi-Fi traffic; however, by default all users legitimately connected to a Wi-Fi network may be able to see each other’s network traffic. Most Wi-Fi systems have a feature known as ‘client isolation’ which, once enabled, blocks users from accessing information destined for another user. Depending on the system, this can be controlled on individual wireless access points or across the whole network. The more advanced configurations can, in effect, allow a user access only to the internet and block access to any other internal devices or services.
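The ‘internet only’ style of isolation boils down to a simple forwarding rule. The Python sketch below models that rule for traffic sent by a Wi-Fi client: the client may reach its own gateway and public internet addresses, but not other private addresses on site. It is a simplified model of the policy, not how any vendor implements it (real systems enforce this inside the access points or controller), and the gateway address 10.30.0.1 is hypothetical.

```python
import ipaddress

# Hypothetical gateway address for the public Wi-Fi zone.
GATEWAY = ipaddress.ip_address("10.30.0.1")


def allow_from_client(dst: str, gateway: ipaddress.IPv4Address = GATEWAY) -> bool:
    """Internet-only isolation: a Wi-Fi client may reach its gateway and public addresses."""
    addr = ipaddress.ip_address(dst)
    if addr == gateway:
        return True  # the client's own gateway stays reachable
    # Block other clients and internal services, which use private address ranges.
    return not addr.is_private


for dst in ("8.8.8.8", "10.30.0.57", "192.168.1.10"):
    print(dst, "allowed" if allow_from_client(dst) else "blocked")
```

In this model another phone on the same Wi-Fi (10.30.0.57) and an internal server (192.168.1.10) are both unreachable, while ordinary internet traffic passes normally.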

Although client isolation is an effective technique on a local Wi-Fi network it is not intended as a replacement for technologies such as VPN (Virtual Private Network) where an encrypted end-to-end tunnel is created. Client isolation is particularly useful for blocking peer-to-peer services on a large network.