“You guys do Wi-Fi at events, right?” is typically how most people remember us. The irony is that the invisible part of our service is in reality the most visible. Unless you know what you are looking for at a large event site, you are unlikely to notice the extensive array of technology quietly beating away like a heart.

From walking up to the entrance and having your ticket scanned, to watching screens and digital signage, using a smartphone app or buying something with your credit card before you leave, today’s event experience is woven with technology touchpoints. Watching a live stream remotely or scrolling through social media content also relies on an infrastructure which supports attendees, the production team, artists, stewards, security, traders & exhibitors, broadcasters, sponsors and just about everyone else involved.

During a big event the humble cables and components which enable all of this may deal with over 25 billion individual electronic packets of data – all of which have to be delivered to the correct location in milliseconds.

In the first of three blogs looking behind the scenes we take a look at how the core network infrastructure is put together.

Let’s Get Physical

When an event organiser starts the build for an event, often several weeks before live, one of the first things they need is connectivity to the internet. Our team arrives at the same time as the cabins and power to deliver what we call First Day Services – a mix of internet connectivity, Wi-Fi and VoIP telephony for the production team.

Connectivity may be provided by traditional copper services such as ADSL or via satellite, but it is now more typically delivered via optical fibre or a wireless point-to-point link, as the demands on internet access capacity are ever increasing. Even 100Mbps optical fibre connections are rapidly being surpassed, with 1Gbps fibre circuits increasingly needed.

PSTN, ISDN, ADSL and fibre are all commonplace on a big site

Wireless point-to-point links relay connectivity from a nearby datacentre or other point of presence. This introduces additional complexity, however, as tall, stable masts are needed at each end of the link to create the required ‘line of sight’. To avoid interference and improve speeds, the latest generations of links use frequencies as high as 24GHz and 60GHz to provide speeds over 1Gbps. Even with the reliability of fibre and modern wireless links, a redundant link remains key, so a second connection is run in parallel to provide a backup.
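For a radio link, ‘line of sight’ means more than being able to see the far mast: a volume around the direct path, the first Fresnel zone, also needs to be largely clear of obstructions, which is one reason mast height matters. The sketch below uses the standard first Fresnel zone formula to estimate that radius at mid-path. The 2km link length is purely an illustrative assumption, not a figure from any of our sites.

```python
import math
from typing import Optional

SPEED_OF_LIGHT_M_S = 299_792_458


def fresnel_radius_m(freq_ghz: float, link_length_m: float,
                     dist_from_end_m: Optional[float] = None) -> float:
    """Radius (metres) of the first Fresnel zone at a point along the link.

    If no point is given, the mid-point is used, where the zone is widest.
    """
    if dist_from_end_m is None:
        dist_from_end_m = link_length_m / 2
    wavelength_m = SPEED_OF_LIGHT_M_S / (freq_ghz * 1e9)
    d1 = dist_from_end_m
    d2 = link_length_m - d1
    return math.sqrt(wavelength_m * d1 * d2 / link_length_m)


# Assumed example: a 2km link at the two frequencies mentioned above.
for freq in (24, 60):
    radius = fresnel_radius_m(freq, 2000)
    print(f"{freq}GHz over 2km: first Fresnel zone radius ~{radius:.1f}m at mid-path")
```

Higher frequencies give a narrower zone, so a 60GHz link needs less clearance around the path than a 24GHz link of the same length. A common rule of thumb is to keep at least 60% of the zone clear.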

From there on, the network infrastructure is built out alongside the rest of the event infrastructure, in step with the event build schedule. Planning is critical, with many sites requiring a network infrastructure as complex as a large company head office, which must be delivered in a matter of days over a large area.

The backbone on many sites is an extensive optical fibre network covering several kilometres and running between the key locations to provide the gigabit and above speeds expected. On some sites a proportion of the fibre is installed permanently – buried into the ground and presented in special cabinets – but in most cases it is loose laid, soft dug, flown, ducted, and ramped around the site. Pulling armoured or CST (corrugated steel tube) fibre over hundreds of metres at a time through bushes, trees, ditches and over structures is no easy task!

Optical fibre cable can run over much longer lengths than copper cable whilst maintaining high speeds. It is harder to work with, however, requiring for example an exotically named ‘fusion splicer’ to join fibre cores together. On one current event, which uses a mix of 8, 16 and 24 core fibre, there are over 1,200 terminations and splices on the 5.5km of fibre. With the network now a critical element, redundancy is important, so the fibre is deployed in ‘rings’ that serve every location from two independent pieces of fibre, a tactic known as ‘diverse routing’. If one piece of fibre becomes damaged, the network continues to operate at full speed.
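The benefit of a ring can be shown with a small connectivity check. This is a minimal sketch rather than a planning tool: the location names are hypothetical, and it only tests whether every location stays reachable after any single fibre segment is cut.

```python
from collections import deque


def reachable(nodes, links, start):
    """Return the set of nodes reachable from `start` over the given links."""
    neighbours = {n: set() for n in nodes}
    for a, b in links:
        neighbours[a].add(b)
        neighbours[b].add(a)
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in neighbours[current] - seen:
            seen.add(nxt)
            queue.append(nxt)
    return seen


def survives_any_single_cut(nodes, links):
    """True if all nodes stay connected after any one link is removed."""
    for cut in links:
        remaining = [link for link in links if link != cut]
        if reachable(nodes, remaining, nodes[0]) != set(nodes):
            return False
    return True


# Hypothetical locations on a site.
pops = ["core", "main_stage", "production", "box_office", "traders"]
ring = [(pops[i], pops[(i + 1) % len(pops)]) for i in range(len(pops))]
chain = ring[:-1]  # the same run without the segment that closes the ring

print(survives_any_single_cut(pops, ring))   # True
print(survives_any_single_cut(pops, chain))  # False
```

Leaving out the closing segment turns the ring into a simple chain, and a single cut anywhere along it then isolates part of the site.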

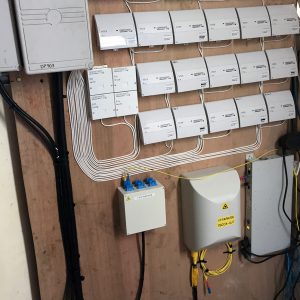

Each secure fibre break-out point, known as a Point of Presence (POP), is furnished with routing and switching hardware within a special weatherproof and temperature-controlled cabinet. This hardware connects up the copper cabling which provides the services at the end point, such as VoIP phones, Wi-Fi access points, PDQs and CCTV cameras.

Each cabinet is fed power from the nearest generator on a 16-amp feed and contains a UPS (Uninterruptible Power Supply) to clean up any power spikes and ensure that if the power fails not only does everything keep running on battery but also an alert is generated so that the power can be restored before the battery runs out.

Although wireless technology is used on sites, there is still a lot of traditional copper cabling using CAT5, as this means power can be delivered along the same cable to the end device. Speed is another factor: most wireless devices are limited to around 450Mbps, and because that capacity is shared between multiple users the actual speed each one sees is too low for demanding services, whereas CAT5 will happily run at 1Gbps to each user.
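The difference between shared and dedicated capacity is simple arithmetic, sketched below. The figure of 30 users on one access point is an illustrative assumption, and the calculation is an idealised best case: real wireless throughput is lower again once protocol overhead and interference are taken into account.

```python
def per_user_mbps(capacity_mbps: float, users: int, shared: bool) -> float:
    """Idealised per-user speed for a shared or a dedicated link."""
    if users < 1:
        raise ValueError("users must be at least 1")
    return capacity_mbps / users if shared else capacity_mbps


WIRELESS_MBPS = 450   # approximate wireless limit quoted above
WIRED_MBPS = 1000     # 1Gbps over CAT5
users = 30            # assumed number of users on one access point

wireless = per_user_mbps(WIRELESS_MBPS, users, shared=True)
wired = per_user_mbps(WIRED_MBPS, users, shared=False)
print(f"Wireless: ~{wireless:.0f}Mbps each, wired: {wired:.0f}Mbps each")
```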

Wireless also carries a risk of interference, so where possible it is kept to non-critical services. There are always times when it is the only option, though, so dedicated ‘Point-to-Point’ links are used. These are similar to normal Wi-Fi but use special antennas and protocols to improve performance and reliability.

A head for heights is important for some installs!

Another significant technology on site is VDSL (Very High Bit-Rate DSL), similar in nature to the ADSL used at home but run in a closed environment and at much higher speeds. It is the same technology used for the BT Infinity service, enabling high speed connections over a copper cable up to around 800m in length (as opposed to 100m for Ethernet).
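The distance limits quoted above suggest a rough rule for choosing a cable type by run length. The sketch below is a simplification under that assumption only. The function name is hypothetical, and in practice the choice also depends on the bandwidth needed, whether the end device needs power over the cable, and what is already installed nearby.

```python
ETHERNET_MAX_M = 100  # Ethernet over copper
VDSL_MAX_M = 800      # approximate VDSL reach over copper


def suggest_medium(run_length_m: float) -> str:
    """Suggest a cable type from run length alone (a deliberate simplification)."""
    if run_length_m <= 0:
        raise ValueError("run length must be positive")
    if run_length_m <= ETHERNET_MAX_M:
        return "ethernet"
    if run_length_m <= VDSL_MAX_M:
        return "vdsl"
    return "fibre"


for length in (60, 450, 1500):
    print(f"{length}m run: {suggest_medium(length)}")
```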

All of these approaches are used to build out the network to each location which requires a network service, from a payment terminal (PDQ) on a stand to a CCTV camera perched high up on a stage. Although there is a detailed site plan, event sites are always subject to change, so our teams have to think on their feet as the site evolves during the build period. Running cables to the top of structures and marquees can be particularly difficult, requiring the use of cherry pickers to reach the required height.

After the event all of the fibre is coiled back up and sent back to our warehouse for re-use and storage. The copper cable is also gathered up but is not suitable for re-use so instead it is all recycled.

The deployment of the core network is a heavy lift in terms of physical effort, but the next step is just as demanding: the logical network, which is how everything is configured to work together using many ‘virtual networks’ and routing protocols. In part 2 we will take a look at the logical network and the magic behind it.

Photo Credit: Fibre Optic via photopin (license)
