In part one of this series we looked at the physical network and part two covered the logical network; now, in the third and final part, we reach the edge network. Everything that has gone before exists purely to enable the users and devices that connect to the network to deliver a service. In this blog we’ll take a journey through the different user groups, looking at how the network serves their requirements and how technology is changing events.

Event Production

Keeping everything ticking along from the first day of build until the last day of derig is the job of a team of dedicated production staff who work whatever the weather. It is perhaps obvious that they all need internet access, but the breadth of their requirements increases year on year. Email and web browsing are only part of the demand: cloud-based collaboration tools for sharing CAD designs and site layouts, along with event management applications covering staff, volunteers, traders, suppliers and contractors, all add to the wider consumption of bandwidth.

Just about everything involved in delivering an event is now done in a connected way, so reliable connectivity is as important as power and water.

Across the site, indoors and outdoors, carefully positioned high-capacity Wi-Fi access points deliver 2.4GHz and 5GHz wireless connectivity to all the key areas, such as site production, technical production, stewarding, security, gates and box offices. Different Wi-Fi networks serve different users – from encrypted and authenticated production networks to open public networks – each managed with its own speeds and priorities. Delivering a good experience to such a high density of users requires careful wireless spectrum management, in some cases using directional antennas to focus the Wi-Fi signal in a specific direction (rather like using a torch to focus light on a specific area). With so many wireless systems in use on event sites, interference can be a real challenge, so wireless scanners are used to look for potential problems, with active management and control making sure there are no ‘rogues’.
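Per-network speed limits like these are commonly enforced with a token bucket. The Python below is a minimal, hypothetical sketch of that technique, not the configuration of any particular access point or controller: each network gets a bucket that refills at its permitted rate, and a packet is forwarded only if enough tokens are available. The class name, network names and rates are assumptions for illustration.

```python
class TokenBucket:
    """Hypothetical per-network rate limiter (rates in bytes per second)."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float):
        self.rate = rate_bytes_per_s    # sustained rate the network is allowed
        self.capacity = burst_bytes     # largest burst permitted at once
        self.tokens = burst_bytes       # start with a full bucket
        self.last = 0.0                 # timestamp (seconds) of the previous check

    def allow(self, packet_bytes: int, now: float) -> bool:
        # Refill for the time elapsed since the last check, capped at the bucket size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes  # spend tokens and forward the packet
            return True
        return False                     # over the limit: drop or queue the packet


# Hypothetical caps: a faster production network and a slower public one.
networks = {
    "production": TokenBucket(rate_bytes_per_s=6_250_000, burst_bytes=1_000_000),  # ~50Mbps
    "public": TokenBucket(rate_bytes_per_s=625_000, burst_bytes=100_000),           # ~5Mbps
}
```

The bucket only enforces the speed cap; priorities would be handled separately, for example by queueing production traffic ahead of public traffic.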

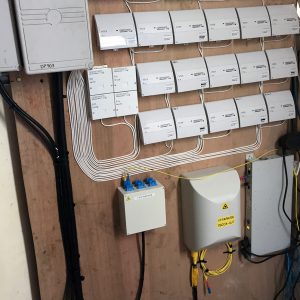

Not everything is wireless, though: many devices, such as VoIP (Voice over IP) phones, and some users require a wired connection, so many cabins have to be cabled back to network switches. Some sites may have over 200 VoIP phones, providing lines for enquiries, complaints, box offices and emergency services, as well as a reliable communications network where there is no mobile service or where the service struggles once attendees arrive. Temporary cabins play host to an array of IT equipment, such as printers, plotters and file servers, all of which need to be connected.

As equipment evolves, more and more devices are becoming network-enabled. Power, for example, is a big part of site production, with an array of generators across the site, and because it is so critical, a modern generator can be hooked into the network like any other device to be monitored and managed remotely. On big sites even two-way radio traffic may be relayed between transmitters across the IP network. Technical production teams also use the network to test sound levels and EQ from different places around the site.

Event Control

Once an event is running, event control becomes the hub of all activity. Alongside laptops, iPads and phones, large screens display live CCTV images from around the site – anywhere from two to over a hundred cameras may be sending in high-definition video streams, with operators controlling the PTZ (Pan/Tilt/Zoom) functionality as they deal with incidents. A modern PTZ camera provides an incredible level of detail, with high optical zoom, image stabilisation, motion detection and tracking, picture enhancement and low-light/infra-red capability. CCTV may be thought of as intrusive, but at events its role is very broad: it plays as much a part in monitoring crowd flows, managing traffic and locating lost children as it does in preventing crime.

These cameras may be 30m up but they can deliver incredibly detailed images across a wide area

Full HD and 4K Ultra HD cameras can deliver video streams of upwards of 10Mbps, with 360-degree panoramic cameras reaching 25Mbps depending on frame rate and quality. This creates many terabytes of data, which has to be archived ready for use as evidence if needed, requiring high-capacity servers both to record the content and to stream it to viewers. One event this year created over 12TB of data – the equivalent of 2,615 DVDs!
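The storage arithmetic is easy to sketch. The Python below converts a constant stream bitrate into archive size; the camera counts and recording hours are assumptions you would replace with your own, and recorders using variable bitrate or motion-triggered recording will store less. The second function reproduces the DVD comparison, which appears to assume 1TB = 1,024GB and a 4.7GB single-layer DVD, rounded up.

```python
import math


def archive_size_tb(bitrate_mbps: float, cameras: int, hours: float) -> float:
    """Decimal terabytes recorded by `cameras` streams at a constant bitrate."""
    bits = bitrate_mbps * 1_000_000 * cameras * hours * 3600
    return bits / 8 / 1e12  # bits -> bytes -> terabytes


def dvds_needed(size_tb: float, gb_per_tb: int = 1024, dvd_gb: float = 4.7) -> int:
    """Single-layer DVDs needed to hold `size_tb`, rounded up to whole discs."""
    return math.ceil(size_tb * gb_per_tb / dvd_gb)
```

For example, 20 hypothetical cameras recording continuously at 10Mbps for three days (`archive_size_tb(10, 20, 72)`) would come to about 6.5TB, before any redundant copies.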

As everything is digital, playback is immediate, allowing incidents to be identified quickly and footage or photos to be distributed within minutes. Content is not only displayed in the main control room but is also available on mobile devices, both on site and at remote locations.

Special cameras provide additional features such as Automatic Number Plate Recognition (ANPR) for use at vehicle entrances or people counting capability to assist with crowd management. Body cameras are becoming more common and now drone cameras are starting to play a part.

At the gates, staff scan tickets or wristbands, which are checked for validity and duplication in real time across the network against central servers. The entrance data feeds into event control, so they can see how many people have entered so far and where queues may be building. Charts show whether the flow is increasing or decreasing so that staff can be allocated where needed.
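The gate logic can be illustrated with a minimal, hypothetical sketch of a central scan service. Because every gate checks against the same server, a wristband already admitted at one gate is rejected as a duplicate at any other. The class and method names, and the idea of bucketing entries by minute to chart flow, are assumptions rather than any particular ticketing system's API.

```python
from collections import Counter
from enum import Enum


class ScanResult(Enum):
    ACCEPTED = "accepted"
    INVALID = "invalid"      # not a ticket that was issued
    DUPLICATE = "duplicate"  # already used at this or another gate


class GateServer:
    """Hypothetical central service that every gate scanner queries over the network."""

    def __init__(self, valid_ids):
        self.valid_ids = set(valid_ids)
        self.admitted = set()
        self.entries_per_minute = Counter()  # feeds the flow charts in event control
        self.entries_per_gate = Counter()    # per-gate throughput, for comparing gates

    def scan(self, ticket_id: str, minute: int, gate: str) -> ScanResult:
        if ticket_id not in self.valid_ids:
            return ScanResult.INVALID
        if ticket_id in self.admitted:
            return ScanResult.DUPLICATE
        self.admitted.add(ticket_id)
        self.entries_per_minute[minute] += 1
        self.entries_per_gate[gate] += 1
        return ScanResult.ACCEPTED

    def total_entered(self) -> int:
        return len(self.admitted)

    def flow_change(self, minute: int) -> int:
        """Positive if more people entered in `minute` than in the minute before."""
        return self.entries_per_minute[minute] - self.entries_per_minute[minute - 1]
```

A single in-memory object stands in here for what would, in practice, be shared state that every scanner on the network can see.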

For music events especially, noise monitoring is important, and this often requires monitors placed outside the event perimeter to report real-time noise levels across the network. Other monitoring is increasingly important too, ranging from wind speed to water levels in the ‘bladders’ used for storing water on site. The advent of cheap GPS trackers is also enabling better monitoring of large plant and key staff.
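Noise readings are commonly summarised as an equivalent continuous sound level (Leq), which is an energy average rather than an arithmetic mean of the decibel values. The sketch below computes Leq from equally spaced readings and compares it with a limit; the function names and limit are hypothetical, and the actual measurement period and limit would come from the event's licence conditions.

```python
import math


def leq(levels_db):
    """Equivalent continuous level of equally spaced dB readings (energy average)."""
    if not levels_db:
        raise ValueError("need at least one reading")
    # Convert each reading back to relative sound energy, average, then return to dB.
    mean_energy = sum(10 ** (level / 10) for level in levels_db) / len(levels_db)
    return 10 * math.log10(mean_energy)


def over_limit(levels_db, limit_db):
    """True if the period's Leq exceeds the (hypothetical) limit for that monitor."""
    return leq(levels_db) > limit_db
```

Averaging readings of 70dB and 60dB this way gives about 67.4dB, whereas a plain arithmetic mean would report 65dB and understate the level.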

External information is also important for event control, with live information required on weather, transport, news and, increasingly, social media. Sources such as Twitter and Facebook are scanned for relevant posts – anything from complaints about toilets to potential trouble spots.

Bars, Catering, Traders & Exhibitors

For those at an event selling anything from beer to hammocks, electronic payment systems have been one of the biggest growth areas, from traditional EPOS (Electronic Point of Sale) systems through to chip & PIN/contactless PDQ terminals, Apple Pay, iZettle and other cashless solutions. These systems are particularly critical, transacting many hundreds of thousands of pounds during an event, with some sites deploying hundreds of terminals.

High-volume outlets such as bars also require stock management systems linking onsite and offsite distribution to keep stock at an appropriate level. A recent development is traders operating more of a virtual stand with limited stock on site: instead, the customer browses online on a tablet, places an order and has the product delivered to their home after the event.

Sponsors

Most events have an element of sponsorship, with each brand wanting to lead the pack in innovation and creativity. Invariably these ‘activations’ involve technology in some form, from basic internet access to more involved interaction using RFID, GPS, augmented reality and virtual reality.

There are often multiple agencies and suppliers involved, with a short window in which to deploy and test just as the rest of the event is reaching its peak of build activity. To make the activation exciting, the sponsor wants it to be ‘leading edge’ (or ‘bleeding edge’, as it is sometimes known!), which typically means testing and fixing on the fly.

Media & Broadcast

Busy media centres create demanding technical environments

From a gaggle of photographers wanting to upload their photos to a mobile broadcast centre, the reliance on technology is huge at a big event. Live streaming is increasingly important, both across the site and also out to content distribution networks. These often require special arrangements with guaranteed bandwidth and QoS (Quality of Service) controls to ensure the video or audio stream is not interrupted. It is not unusual to get requests for upwards of 200Mbps for an individual broadcaster.
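Guaranteed bandwidth only means something if the backhaul can honour every reservation at the same time. A minimal sketch of admission control, assuming a single backhaul link and a fixed fraction of it kept back for best-effort traffic (the 20% headroom, the class name and the client names are all hypothetical):

```python
class BackhaulLink:
    """Hypothetical admission control for guaranteed-bandwidth reservations on one link."""

    def __init__(self, capacity_mbps: float, headroom: float = 0.2):
        # Keep a fraction of the link unreserved for best-effort traffic.
        self.reservable_mbps = capacity_mbps * (1 - headroom)
        self.reservations = {}

    def reserved_mbps(self) -> float:
        return sum(self.reservations.values())

    def request(self, client: str, mbps: float) -> bool:
        """Grant the reservation only if all guarantees still fit on the link."""
        if self.reserved_mbps() + mbps > self.reservable_mbps:
            return False
        self.reservations[client] = self.reservations.get(client, 0) + mbps
        return True
```

A rejected request signals that more capacity is needed before the guarantee can be offered; the QoS marking and queueing that actually protect the stream would be configured separately on the network equipment.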

More and more broadcasters are moving to IP solutions (away from dedicated broadcast circuits) requiring higher capacity and redundancy to ensure the highest availability. These demands increasingly require fibre to the truck or cabin with dedicated fibre runs back to a core hub.

Alongside content distribution, good-quality, high-density Wi-Fi is essential in a crowded media centre, with the emphasis on fast upload speeds. Encoders and decoders are used to distribute video streams around a site, creating IPTV networks for both real-time viewing and VoD (Video-on-Demand) applications. The next growth area is 360-degree cameras, used to provide a more immersive experience both on site and for remote viewers.

Attendees

Then after all this there may be public Wi-Fi. For wide-scale public Wi-Fi (as opposed to a small hotspot) it is typical over the duration of an event for at least 50% of the attendees to use the network at some point – the usage being higher when event specific features are promoted such as smartphone apps and event sponsor activities.

The step up from normal production services to a large-scale public Wi-Fi deployment is significant. A typical production network would be unlikely to see more than 1,000 simultaneous users, but a big public network can see that rise beyond 10,000, requiring a higher-density, more complex network design as well as significantly greater backhaul connectivity, with public usage pulling many terabytes of data over a few days.

With so many users, a large amount of data can be collected anonymously and displayed as a heat map, showing where users are most densely concentrated and how they move around the event site. This information is very useful for planning and event management.
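One way to build such a heat map, sketched here under assumptions: each access point is mapped to a named zone, association logs provide (MAC address, zone, time slot) sightings, and MAC addresses are pseudonymised with a salted hash before counting, with the salt kept secret and discarded after the event. The function names and data shapes are hypothetical, and the sketch ignores the MAC address randomisation that modern phones use.

```python
import hashlib
from collections import defaultdict


def anonymise(mac: str, salt: bytes) -> str:
    """Salted one-way hash so raw device MAC addresses are never stored."""
    return hashlib.sha256(salt + mac.lower().encode()).hexdigest()


def heat_map(sightings, salt: bytes):
    """Count unique devices per (zone, time slot) from (mac, zone, slot) sightings."""
    devices = defaultdict(set)
    for mac, zone, slot in sightings:
        devices[(zone, slot)].add(anonymise(mac, salt))
    return {key: len(ids) for key, ids in devices.items()}
```

Because a device always hashes to the same ID under one salt, consecutive sightings can also be linked to show movement between zones without storing any MAC address.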

Public Wi-Fi has to deal with thousands of simultaneous connections

Break It Down

As the final band plays its encore, or the show announces that it is time to close, the team switches to the carefully designed breakdown plan. What can take weeks to build is removed within a couple of days, loaded into lorries and shipped back to the warehouse, to be reconfigured and sent out to the next event. Sometimes tight scheduling means equipment goes straight from one country or job to the next. Not everything is removed at once, though: a subset of services remains for the organisers while they clear the site, until the last cabin is lifted onto a lorry and we remove the last Wi-Fi access point and phone.

The change over the last five years has been rapid and shows no sign of slowing down as demand increases and services evolve. Services such as personal live streaming, augmented reality, location tracking and other interactive features are all continuing to push demands further.

So yes, we provide the Wi-Fi at events, but when you see an Etherlive event network on your phone, spare a thought for what goes on behind the scenes.